I’m taking the following as an axiom: Exposing real pre-emptive threading with shared mutable data structures to application programmers is wrong. …It gets very hard to find humans who can actually reason about threads well enough to be usefully productive.

When I give talks about this stuff, I assert that threads are a recipe for deadlocks, race conditions, horrible non-reproducible bugs that take endless pain to find, and hard-to-diagnose performance problems. Nobody ever pushes back.

October 26, 2009

Threading is Wrong

October 23, 2009

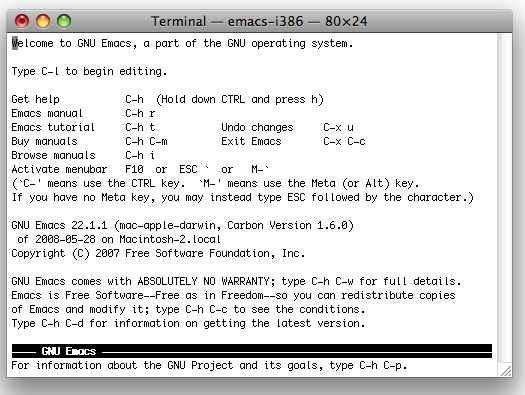

GUI is Dead, Long Live UI

The term GUI, Graphical User Interface, pronounced “Gooey”, is laughably anachronistic. All interfaces meant for people on modern computers are graphical. The right abbreviation to use today is simply UI, for User Interface, pronounced “You I”.

Believe me, I understand that a command line interface is still useful today. I use them. I’m a programmer. I get the whole UNIX thing. Even without a pipe, a command-line is the highest-bandwidth input mechanism we have today.

But all command lines live inside a graphical OS. That’s how computers work in the 21st century.

Whenever I see “GUI” written I can’t help but wonder if the author is dangerously out of touch. Do they still think graphical interfaces are a novelty that needs to be called out?

October 20, 2009

Knuth can be Out of Touch

…Knuth has a terrible track record, bringing us TeX, which is a great typesetting language, but impossible to read, and a three-volume set of great algorithms written in some of the most impenetrable, quirky pseudocode you’re ever likely to see.

There, it’s been said. But let the posse note I wasn’t technically the one to do it!

JavaScript Nailed ||

One thing about JavaScript I really like is that its ||, the Logical Or operator, is really a more general ‘Eval Until True’ operation. (If you have a better name for this operation, please leave a comment!) It’s the same kind of or operator used in Lisp. And I believe it’s the best choice for a language to use.

In C/C++, a || b is equivalent to,

    if a evaluates to a non-zero value:
        return true;
    if b evaluates to a non-zero value:
        return true;
    otherwise:
        return false;

Note that if a can be converted to true, then b is not evaluated. Importantly, in C/C++ || always returns a boolean result: a bool in C++, and an int that is either 0 or 1 in C.
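To make that concrete, here is a minimal C sketch. In C proper the result of || is an int that is always 0 or 1. The names calls, touch and logical_or are invented for this illustration.

```c
/* Counts calls to touch(), so short-circuiting is observable. */
int calls = 0;

/* Hypothetical helper: records that it ran, then passes its argument through. */
int touch(int value) {
    calls++;
    return value;
}

/* In C, a || b is an int that is 1 or 0, never the value of a or b. */
int logical_or(int a, int b) {
    return a || b;
}
```

Evaluating touch(7) || touch(9) bumps calls only once, because the right-hand side is skipped as soon as the left-hand side is non-zero.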

But the JavaScript || returns the value of the first operand that can be converted to true, or the last operand if neither can be interpreted as true,

    if a evaluates to a truthy value:
        return a;
    otherwise:
        return b;

Concise

JavaScript’s || is some sweet syntactic sugar.

We can write,

return playerName || "Player 1";

instead of,

return playerName ? playerName : "Player 1";

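And simplify assert-like code in a Perl-esque way. (throw is a statement in JavaScript, not an expression, so it has to be wrapped in a function. fail() here is a hypothetical helper that throws its argument.)

x || fail("x was unexpectedly null!");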

It’s interesting that a more concise definition of || allows more concise code, even though intuitively we’d expect a more complex || to “do more work for us”.
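For comparison, C has no value-returning ||, so the playerName default needs a helper. A minimal sketch, assuming a hypothetical function or_else that treats NULL and the empty string as false, roughly as JavaScript does:

```c
#include <stddef.h>

/* Hypothetical helper mirroring  playerName || "Player 1".
 * Returns value when it is a non-empty string, otherwise fallback. */
const char *or_else(const char *value, const char *fallback) {
    return (value != NULL && value[0] != '\0') ? value : fallback;
}
```

Unlike ||, a function call evaluates both arguments before it runs, so this sketch gives up the short-circuiting.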

General

Defining || to return values, not true/false, is much more useful for functional programming.

The short-circuit-evaluation is powerful enough to replace if-statements. For example, the familiar factorial function,

    function factorial(n){
        if(n == 0) return 1;
        return n*factorial(n-1);
    }

can be written in JavaScript using && and || expressions,

function factorial2(n){ return n * (n && factorial2(n-1)) || 1;}

Yes, I know this isn’t the clearest way to write a factorial, and it would still be an expression if it used ?:, but hopefully this gives you a sense of what short-circuiting operations can do.

Unlike ?:, the two-argument || intuitively generalizes to n arguments, equivalent to a1 || a2 || ... || an. This makes it even more useful for dealing with abstractions.

Logical operators that return values, instead of simply booleans, are more expressive and powerful, although at first they may not seem useful — especially coming from a language without them.

October 19, 2009

Less is More

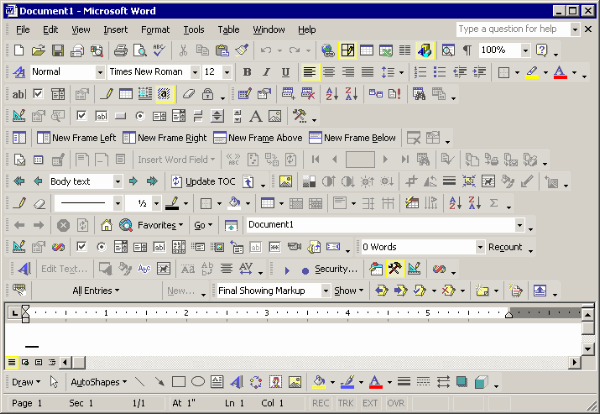

Fundamentally, a computer is a tool. People don’t use computers to use the computer, they use a computer to get something done. An interface helps people control the computer, but it also gets in their way. Inevitably, any on-screen widget is displacing some part of the thing the user is trying to manipulate.

As an infamous example, expanding Microsoft Word’s toolbars leaves no room for actually writing something,

(Screenshot by Jeff Atwood)

Users don’t want to admire the scrollbars. Truth be told, they don’t even want scrollbars as such, they just want to access content and have the interface get out of the way.

Show The Data

I highly recommend Edward Tufte’s The Visual Display of Quantitative Information. It’s probably the most effective book for cultivating a distaste for graphical excesses.

Tufte’s teachings are rooted in static print. But many of the principles are just as valuable in interactive media. (And static graphics are still very useful in analysis and presentation. Learning how to graph better isn’t a waste of time.)

Tufte’s first rule of statistical graphic design is, “Show the data”, and it’s an excellent starting point for interface design as well.

Cathy Shive has an excellent post expanding on Tufte’s term Computer Administrative Debris.

The Chartjunk blog showcases a few real-world examples of Tuftian redrawings.

Get Out of My Mind

Learning happens when attention is focused. … If you don’t have a good theory of learning, then you can still get it to happen by helping the person focus. One of the ways you can help a person focus is by removing interference.

–Alan Kay, Doing With Images Makes Symbols.

Paradoxically then, the better the design, the less it will be noticed. We should strive to write our interfaces in invisible ink.

sizeof() Style

Never say sizeof(sometype) when you can say sizeof(a_variable). The latter works even if the type of a_variable changes, and it is much more obvious what the size is supposed to represent.
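A small sketch of the advice, using a made-up struct point and a hypothetical helper alloc_points. Neither comes from the quoted source.

```c
#include <stdlib.h>

/* Hypothetical record type, used only for illustration. */
struct point {
    int x;
    int y;
};

/* Allocates count zeroed points. sizeof *points follows the type of the
 * pointer, so changing the element type needs no edit on this line. */
struct point *alloc_points(size_t count) {
    struct point *points = calloc(count, sizeof *points);
    return points;
}
```

Had the call said sizeof(struct point), renaming or swapping the element type would leave a stale size behind that the compiler would happily accept.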

October 16, 2009

Hack: Counting Variadic Arguments in C

This isn’t practical, but I think it’s neat that it’s doable in C99. The implementation I present here is incomplete and for illustrative purposes only.

Background

C’s implementation of variadic functions (functions that take a variable number of arguments) is characteristically bare-bones. Even though the compiler knows the number, and type, of all arguments passed to variadic functions, there isn’t a mechanism for the function to get this information from the compiler. Instead, programmers need to pass an extra argument, like the printf format string, to tell the function “these are the arguments I gave you”. This has worked for over 37 years. But it’s clunky: you have to write the same information twice, once for the compiler and again to tell the function what you told the compiler.

Inspecting Arguments in C

Argument Type

I don’t know of a way to find the type of the Nth argument to a variadic function, called with heterogeneous types. If you can figure out a way, I’d love to know. The typeof extension is often sufficient to write generic code that works when every argument has the same type. (C++ templates also solve this problem if we step outside of C-proper.)

Argument Count (The Good Stuff Starts Here)

By using variadic macros, and stringification (#), we can actually pass a function the literal string of its argument list from the source code — which it can parse to determine how many arguments it was given.

For example, say f() is a variadic function. We create a variadic wrapper macro, F(), and call it like so in our source code,

x = F(a,b,c);

The preprocessor expands this to,

x = f("a,b,c",a,b,c)

Or perhaps,

x = f(count_arguments("a,b,c"),a,b,c)

where count_arguments(char *s) returns the number of arguments in the source-code string s. (Technically, s would be an argument-expression-list.)

Example Code

Here’s an implementation of iArray(), an array-builder for int values, very much like JavaScript’s Array() constructor. Unlike the quirky JavaScript Array(), iArray(3) returns an array containing just the element 3, [3], not an uninitialized array with 3 elements, [undefined, undefined, undefined]. Another difference: iArray(), invoked with no arguments, is invalid, and will not compile.

#define iArray(...) alloc_ints(count_arguments(#__VA_ARGS__), __VA_ARGS__)

This macro is pretty straightforward. It’s given a variable number of arguments, represented by __VA_ARGS__ in the expansion. #__VA_ARGS__ turns the code into a string so that count_arguments can analyze it. (If you were doing this for real, you should use two levels of stringification though, otherwise macros won’t be fully expanded. I chose to keep things “demo-simple” here.)
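The two-level version might look something like the sketch below. STRINGIFY_RAW, STRINGIFY, COUNT_ARGS and PAIR are names invented for this illustration, and count_arguments is repeated (with a const parameter) only so the snippet stands alone.

```c
/* One level: stringifies the tokens exactly as written. */
#define STRINGIFY_RAW(...) #__VA_ARGS__
/* Two levels: the arguments are macro-expanded before being stringified. */
#define STRINGIFY(...) STRINGIFY_RAW(__VA_ARGS__)

/* Hypothetical caller-side macro that hides a comma. */
#define PAIR 1, 2

/* Same naive comma counter as in the post. */
unsigned count_arguments(const char *s) {
    unsigned i, argc = 1;
    for (i = 0; s[i]; i++)
        if (s[i] == ',')
            argc++;
    return argc;
}

/* Counts arguments after macro expansion. */
#define COUNT_ARGS(...) count_arguments(STRINGIFY(__VA_ARGS__))
```

With one level, the counter only ever sees the text PAIR and reports a single argument. With two levels it sees the expanded list and counts the hidden comma too.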

    unsigned count_arguments(char *s){
        unsigned i,argc = 1;
        for(i = 0; s[i]; i++)
            if(s[i] == ',')
                argc++;
        return argc;
    }

This is a dangerously naive implementation and only works correctly when iArray() is given a straightforward non-empty list of values or variables. Basically it’s the least code I could write to make a working demo.

Since iArray must have at least one argument to compile, we just count the commas in the argument-list to see how many other arguments were passed. Simple to code, but it fails for more complex expressions like f(a,g(b,c)).
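A slightly less naive counter could track nesting, so that commas inside parentheses, brackets, braces, or quoted literals are ignored. This is only a sketch under the assumption of non-empty, well-formed input. The name count_arguments_nested is made up, and it is still far from a real parser.

```c
#include <stddef.h>

/* Counts top-level commas in an argument-list string. Commas nested inside
 * (), [] or {} and commas inside string or character literals are skipped. */
unsigned count_arguments_nested(const char *s) {
    unsigned argc = 1;
    int depth = 0;
    char quote = 0; /* the open quote character, or 0 outside a literal */

    for (size_t i = 0; s[i] != '\0'; i++) {
        char c = s[i];
        if (quote) {
            if (c == '\\' && s[i + 1] != '\0')
                i++;            /* skip the escaped character */
            else if (c == quote)
                quote = 0;      /* literal closed */
        } else if (c == '"' || c == '\'') {
            quote = c;
        } else if (c == '(' || c == '[' || c == '{') {
            depth++;
        } else if (c == ')' || c == ']' || c == '}') {
            depth--;
        } else if (c == ',' && depth == 0) {
            argc++;
        }
    }
    return argc;
}
```

Given the string for f(a,g(b,c)), that is "a,g(b,c)", this version reports two arguments where the plain comma counter reports three.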

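    #include <stdarg.h> /* va_list, va_start, va_arg, va_end */
    #include <stdlib.h> /* malloc */

    int *alloc_ints(unsigned count, ...){
        unsigned i = 0;
        int *ints = malloc(sizeof(int) * count);
        va_list args;

        va_start(args, count);
        for(i = 0; i < count; i++)
            ints[i] = va_arg(args,int); /* each extra argument must be an int */
        va_end(args);

        return ints; /* the caller owns this memory and must free() it */
    }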

Just as you'd expect, this code allocates enough memory to hold count ints, and fills it with the remaining count arguments. Bad things happen if fewer than count arguments are passed, or they are the wrong type.

Download the code, if you like.

Parsing is Hard, Let's Go Shopping

I didn't even try to correctly parse any valid argument-expression-list in count_arguments. It's nontrivial. I'd rather deal with choosing the correct MAX3 or MAX4 macro in a few places than maintain such a code base.

So this kind of introspection isn't really practical in C. But it's neat that it can be done, without any tinkering with the compiler or language.
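For the narrower case where every argument is an int, there is a way to sidestep string parsing. To be clear, this is a different technique from the one in this post: a C99 compound literal builds an int array from the arguments and sizeof measures it at compile time. COUNT_INTS, copy_ints and iArray2 are hypothetical names for this sketch.

```c
#include <stdlib.h>
#include <string.h>

/* Number of arguments, found by measuring an int array built from them.
 * sizeof does not evaluate its operand, so the arguments are not run here. */
#define COUNT_INTS(...) (sizeof((int[]){__VA_ARGS__}) / sizeof(int))

/* Hypothetical helper: copies count ints into freshly allocated memory. */
int *copy_ints(const int *values, size_t count) {
    int *ints = malloc(count * sizeof *ints);
    if (ints != NULL)
        memcpy(ints, values, count * sizeof *ints);
    return ints;
}

/* Like iArray(), but the count comes from the compiler, not from a string. */
#define iArray2(...) copy_ints((int[]){__VA_ARGS__}, COUNT_INTS(__VA_ARGS__))
```

Because the compiler does the counting, a nested call such as iArray2(1, div(7, 2).quot) is counted as two arguments. It does nothing for heterogeneous argument types, though.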

October 14, 2009

Misunderestimating the Cloud

Recently, a Microsoft datacenter lost thousands of mobile phone users’ personal data.

A common response to this story is that this kind of danger is inherent in “cloud” computing services, where you rely on some service provider to take care of your data. But this misses the point, I think. Preserving data is difficult, and individual users tend to do a mediocre job of it. Admit it: You have lost your own data at some point. I know I have lost some of mine. A big, professionally run data center is much less likely to lose your data than you are.

It’s easy to convince yourself of this anecdotally. Look around you: how many of the people you loosely know on Facebook have you seen complain about losing all their contacts when they lost their phone? I’ve seen at least a dozen such announcements. But nobody I actually know has been affected by this recent fiasco, or complained about losing contacts in any other “cloud” failure.

But people have a bias to overestimate risks they can’t control, and underestimate risks they can control. So we reinvent the wheel, and lose our own data ourselves.

Hey, I do it too. Actuarially, I really should be paying wordpress.com to manage this blog.

October 12, 2009

Don’t Check malloc()

There’s no point in trying to recover from a malloc failure on OS X, because by the time you detect the failure and try to recover, your process is likely to already be doomed. There’s no need to do your own logging, because malloc itself does a good job of that. And finally there’s no real need to even explicitly abort, because any malloc failure is virtually guaranteed to result in an instantaneous crash with a good stack trace.

This is excellent advice. Peppering your code with if statements harms readability and simplicity.

It’s still a good idea to check large (many MB) mallocs, but I can’t imagine recovering gracefully from a situation where 32-byte memory allocations are failing on a modern desktop.

October 6, 2009

‘Yum!’

I give Microsoft’s current Mac software some shit, but I think it’s deserved. So it’s only fair I mention their glory days.

From “Classic” Mac OS 8.1 in 1998 through Mac OS X 10.2, Microsoft’s Internet Explorer (for Mac) was the default web browser Apple chose for Mac OS. The very first iMac? It came with IE:mac, just like the first version of Mac OS X.

And IE:mac wasn’t so bad, for its era. (It was the first browser to have color correcting PNGs, by the way!) There was one really neat feature that I think is worth calling out: it would match your iMac’s color, automagically.

Technical Details That I Only Half Remember

If you have a better understanding of how this worked, please let me know! I couldn’t find any details online. Mostly, I’m writing down what I remember before I forget.

The poorly named Gestalt function lets you check information about the Mac OS runtime, like “what version of Mac OS is this?”. You pass it a FourCharCode, and it replies with a 32-bit value or an error code. It’s old stuff from the “Classic” Mac OS days.

There was an undocumented code, 'yum!' 1, that returned the color of the iMac or iBook case. IE:mac would check this when it first started, and choose a color scheme to match the operator’s Mac. It was a seamless personal touch that really impressed me.

It’s the sort of thing I’d like to see more of on today’s multi-colored iPods and iPhones.

1. It might have been 'Yum!', I don’t remember exactly, and Gestalt() returns gestaltUndefSelectorErr, -5551, for all of these variations on my MacBook Pro under Snow Leopard.