There’s a lot of discord over Apple’s draconian “closed” handling of the iPhone and App store. And rightly so. But there are a few interesting lessons in the current situation. The one I want to discuss now is that,

Being able to program your own computer isn’t enough to make it open

As things stand today, Apple can’t stop you from installing any damn iPhone app if you build yourself.

To do that you have to join the iPhone developer program of course. And there’s a $99/year fee. That’s inconvenient, but it’s just using a subscription-based way of selling iPhone OS: Developer Edition.

That’s the kind of dirty money-grabbing scheme I’d expect from Microsoft. It’s a bit shady, because it’s not how most OSes are sold. But it’s not without precedent. And unless you are against ever charging money for software, I don’t think there’s an argument that it’s actually depriving people of freedom.

Yes, it’s an unaffordably high price for many. But the iPhone is a premium good that costs real money to build — it’s inherently beyond many people’s means, even when subsidized.

Observation: Only Binaries Matter

If you have a great iPhone app that Apple won’t allow into the store, you can still give it to me in source code form, and since I have iPhone OS: Developer Edition, I can run it on my iPhone.

But clearly that’s not good enough.

In fact, I’m not aware of any substantive iPhone App that’s distributed as source. By “substantive” I mean an app with a lot of users — say as many as the 100th most downloaded App Store app — or an app that does something that makes people jealous, like tethering (See update!), which we know is possible using the SDK. I realize this is a wishy-washy definition — what I’m trying to say is that distributed-as-source iPhone Apps seem to be totally irrelevant.

“It’s not open until I can put Linux on it”

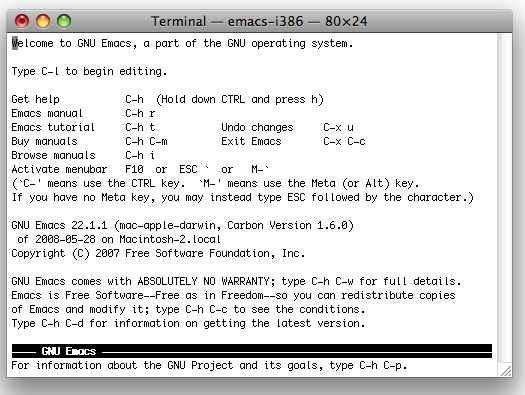

I believe it’s technically possible to run Linux on an iPhone without jail-breaking it. (Although it’s not terribly practical.) Just build Linux (or an emulator that runs Linux) as an iPhone app, and leave it running all the time to get around the limitations on background processes.

Apple won’t allow such a thing into the App Store of course —but how does that stop you from distributing the source for it? As best I can tell, it doesn’t.

So as things stand today, yes you can distribute source code that lets any iPhone OS: Developer Edition user run Linux. It’s technically challenging, but it’s doable.

Conclusion

It’s possible to build open systems on top of closed systems. We’ve done it before when we built the internet on Ma Bell’s back.

But the iPhone remains a closed device. User-compiled applications have 0 momentum. And I think that clearly shows the irrelevance of the rare “programmer user”, who is comfortable dealing with the source code for the programs he uses.

UPDATE 2010-01-21: iProxy is an open-source project to enable tethering! Maybe the programmer-user will have their day after-all.